Out of the Rain and into the Lab

by Kwabena Osei

The issue of water quality impairment has become topical in the last few decades, with the focus shifting from point source pollution in the 1970s to nonpoint source pollution in the 1990s. In addition, legislation requiring stormwater treatment at new development sites has gained support as the Environmental Protection Agency, academics, environmental groups and the mainstream news media have highlighted the continued impairment of our water bodies by nonpoint source pollutants. Stormwater treatment regulations most commonly focus on controlling sediments and nutrients, because these constituents remain the primary pollutants of concern threatening some of America's treasured natural resources, including the Chesapeake Bay and the Great Lakes.

In an industry where alternative sediment and nutrient removal practices are increasingly sought, engineers and regulators need to know precisely how efficient various Best Management Practices (BMPs) are. For many years, field testing has been the preferred method for evaluating structural stormwater treatment systems. However, many field-testing exercises carried out on structural BMPs over the past few years have been unsuccessful or inconclusive, leading scientists and engineers to conclude that field testing is fraught with obstacles that are difficult to eliminate and can produce results that do not necessarily represent the performance of either proprietary or non-proprietary stormwater management practices.

In the last decade or so, proprietary stormwater separators and filters have become integral to stormwater treatment as municipalities and developers install BMPs to meet stormwater permit requirements. Proprietary BMPs tend to have smaller footprints than conventional non-proprietary BMPs and are particularly suited for the urban environment where space is at a premium.

One of the most contentious debates in the stormwater industry has centered on how to assess the performance of proprietary systems. Performance verification, in theory, would determine how well a given proprietary system performs relative to a conventional BMP and relative to other proprietary BMPs.

Typically, a BMP is sized for the flow rate at which it is expected to provide a certain percentage of sediment or nutrient removal. Most regulatory agencies require 80 percent removal of Total Suspended Solids (TSS) for sediments and between 40 and 60 percent removal for nutrients. Although the "percent removal" standard has been the primary means of assessing BMP performance, some within the industry argue that it discounts the effects of parameters such as particle size distribution, specific gravity, irreducible concentrations and temperature on a BMP's ability to provide adequate treatment. They suggest that a better way to achieve pollutant control is to set an effluent concentration limit, as is done in the wastewater industry.
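To illustrate how the two criteria can disagree, the following Python sketch scores the same device against an 80 percent TSS removal target and against an effluent concentration limit. The 25 mg/L limit and the influent and effluent concentrations are hypothetical values chosen for illustration, not regulatory figures, and the function names are our own.

```python
# Sketch: percent-removal criterion vs. effluent-concentration criterion.
# All thresholds and concentrations are hypothetical illustration values.

REQUIRED_REMOVAL_PCT = 80.0   # typical TSS percent-removal target
EFFLUENT_LIMIT_MG_L = 25.0    # hypothetical effluent concentration limit


def percent_removal(influent_mg_l: float, effluent_mg_l: float) -> float:
    """Percent of the influent concentration removed by the device."""
    if influent_mg_l <= 0:
        raise ValueError("influent concentration must be positive")
    return 100.0 * (influent_mg_l - effluent_mg_l) / influent_mg_l


def meets_percent_standard(influent_mg_l: float, effluent_mg_l: float) -> bool:
    """True if the device reaches the percent-removal target."""
    return percent_removal(influent_mg_l, effluent_mg_l) >= REQUIRED_REMOVAL_PCT


def meets_effluent_limit(effluent_mg_l: float) -> bool:
    """True if the effluent concentration is at or below the limit."""
    return effluent_mg_l <= EFFLUENT_LIMIT_MG_L


# Hypothetical cases: a heavily loaded site and a relatively clean site.
for influent, effluent in [(400.0, 40.0), (60.0, 20.0)]:
    print(
        f"influent {influent:.0f} mg/L, effluent {effluent:.0f} mg/L: "
        f"removal {percent_removal(influent, effluent):.1f}%, "
        f"percent standard met: {meets_percent_standard(influent, effluent)}, "
        f"effluent limit met: {meets_effluent_limit(effluent)}"
    )
```

With a heavily loaded influent, the device clears the 80 percent bar while still discharging more than the limit; with a relatively clean influent, it misses the percent target while meeting the limit. That second case is the irreducible-concentration problem critics of percent removal point to.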

Depending on regulatory agency requirements, treatment systems are tested using in-field or laboratory-based protocols, or both. Regulatory agencies often grant limited use of treatment systems based on laboratory data, but require field data before a treatment system can be considered for general use.

Advantages of Laboratory Testing

In general, consultants and regulators prefer performance data from the field over lab data because lab data are not collected under “real-world” conditions. This makes it difficult for engineers to specify treatment devices that have not been tested under the same flow and pollutant conditions as the site or region where they will be installed. Although this argument has merit, the easiest and most effective way to compare systems is to test them under the same conditions, and that can be done effectively only in a controlled setting.

The primary advantage of laboratory testing is the ability to control test variables, which minimizes or eliminates most of the problems associated with field testing. Control is critical when identifying similarities and differences between systems is a primary objective of the test program.

With lab testing, the flow rates and sediment particle size distributions are determined beforehand, and because various storm scenarios can be simulated, researchers don't have to wait for rain to run a test. Results are obtained within a relatively short period, and changes can be made to the test setup, protocols and so on without significantly increasing the cost or duration of the test program.

Laboratory test programs have the added advantage of allowing researchers to evaluate sediment resuspension, an increasingly common phenomenon observed in stormwater separators that have poorly isolated sediment storage zones. But most importantly, lab-based tests and results are repeatable, allowing for better statistical confidence in the data obtained.
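Repeatability is what makes statistical comparison possible. As a minimal sketch, assuming a handful of repeat runs at a single flow rate, the Python below summarizes removal efficiencies by their mean, sample standard deviation, coefficient of variation and the half-width of a 95 percent confidence interval on the mean. The run values are invented for illustration, and the t critical value of 2.776 applies only to five runs (four degrees of freedom).

```python
import math
import statistics

# Two-sided 95% Student's t critical value for 4 degrees of freedom (n = 5).
T_CRIT_95_DF4 = 2.776


def repeatability_summary(efficiencies_pct, t_crit):
    """Mean, sample standard deviation, coefficient of variation (%) and
    95% confidence-interval half-width for repeated removal efficiencies."""
    n = len(efficiencies_pct)
    if n < 2:
        raise ValueError("need at least two repeat runs")
    mean = statistics.mean(efficiencies_pct)
    sd = statistics.stdev(efficiencies_pct)  # uses n - 1 in the denominator
    cv = 100.0 * sd / mean
    half_width = t_crit * sd / math.sqrt(n)
    return {"mean": mean, "sd": sd, "cv_pct": cv, "ci95_half_width": half_width}


# Hypothetical results: tightly clustered runs vs. widely scattered ones.
tight = [78.0, 80.0, 79.0, 81.0, 82.0]
scattered = [55.0, 92.0, 70.0, 88.0, 45.0]

for label, runs in [("tight", tight), ("scattered", scattered)]:
    s = repeatability_summary(runs, T_CRIT_95_DF4)
    print(f"{label}: mean {s['mean']:.1f}%, CV {s['cv_pct']:.1f}%, "
          f"95% CI +/- {s['ci95_half_width']:.1f} points")
```

The narrower the interval, the smaller the difference between two devices that can be distinguished with confidence.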

Test Protocols Matter

Nonetheless, not all lab testing is the same, and not all lab testing is necessarily "good" science. In fact, data have shown that the way samples are collected from lab pipework can have as much impact on "device performance" (i.e., removal efficiency results) as the particle size distribution of the influent solids.

The commonly used laboratory test protocols are the Indirect and Direct Test methods. These protocols differ significantly in their approach to pollutant removal measurement: the Indirect method measures the difference in pollutant concentration between the inlet stream and outlet stream of a device; the Direct method bases device performance on the total mass of material fed into and captured within the test device.

Indirect Testing

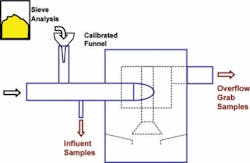

Indirect Testing is the most commonly used method for assessing "percent removal" in the lab. It involves capturing grab samples before the flow enters the test unit (influent) and after the flow exits the unit (effluent). Concentrated sediment slurry is dosed into the influent flow stream of clean water and samples are taken prior to the mixed flow reaching the test device. After flow exits the test device, grab samples are taken at the overflow (see Fig. 1a).

This method is prone to error, depending on the sampling region within the influent stream, how well the slurry mixes into the influent stream, and the type of sampling port used. As is typical of field studies, the device's efficiency is inferred from its inefficiency (the sediment that escapes in the effluent); the sediment actually captured within the device does not enter the calculation.
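The Indirect calculation can be sketched in a few lines of Python. This assumes a steady test flow rate, so the ratio of mean effluent to mean influent concentration stands in for the ratio of masses; the grab-sample values and the 10 percent sampling bias are hypothetical. The captured sediment never appears in the calculation.

```python
import statistics


def indirect_efficiency(influent_mg_l, effluent_mg_l):
    """Removal efficiency (%) inferred from influent and effluent grab samples.

    Assumes a constant flow rate, so mean concentrations are proportional to
    mass flux. The sediment retained in the device is never measured.
    """
    c_in = statistics.mean(influent_mg_l)
    c_out = statistics.mean(effluent_mg_l)
    if c_in <= 0:
        raise ValueError("mean influent concentration must be positive")
    return 100.0 * (1.0 - c_out / c_in)


influent = [200.0, 210.0, 190.0]   # hypothetical grab samples, mg/L
effluent = [50.0, 55.0, 45.0]

# Same run, but the influent port over-reads by 10% (e.g. a poorly placed port).
biased_influent = [1.10 * c for c in influent]

print(f"efficiency: {indirect_efficiency(influent, effluent):.1f}%")
print(f"with biased influent port: {indirect_efficiency(biased_influent, effluent):.1f}%")
```

A 10 percent error at a single sampling port moves the reported efficiency by more than two percentage points, with no change in the device itself.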

Direct Testing

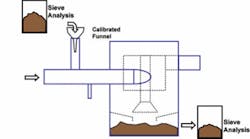

The Direct Test involves measuring the mass of sediment actually captured within the unit and comparing it to the known mass of material fed into the device's influent stream over the duration of the test. Any material lost during the drain-down and recovery stage after a test shows up as reduced device efficiency, so the estimate is biased low and errs on the side of caution.

A known mass of sediment is fed through the system at the required concentration and flow rate. After the test, the water in the device is decanted, and the sediment captured within the device is vacuumed out, dried and weighed (see Fig. 1b). A series of tests performed using this protocol has shown that removal efficiencies are very consistent from test to test.
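The Direct calculation is a simple mass balance. The Python sketch below first works out how much sediment must be fed to hold a target concentration at a given flow rate and duration, then computes efficiency from the dried, recovered mass. The concentration, flow rate, duration and masses are hypothetical, and the function names are ours.

```python
def feed_mass_g(concentration_mg_l: float, flow_l_s: float, duration_s: float) -> float:
    """Sediment mass (g) needed to dose a flow at a target concentration."""
    return concentration_mg_l * flow_l_s * duration_s / 1000.0  # mg -> g


def direct_efficiency(mass_fed_g: float, mass_recovered_g: float) -> float:
    """Removal efficiency (%) from the mass fed and the dried mass recovered."""
    if mass_fed_g <= 0:
        raise ValueError("mass fed must be positive")
    if not 0 <= mass_recovered_g <= mass_fed_g:
        raise ValueError("recovered mass must lie between 0 and the mass fed")
    return 100.0 * mass_recovered_g / mass_fed_g


# Hypothetical run: 200 mg/L at 10 L/s for 30 minutes.
fed = feed_mass_g(200.0, 10.0, 30 * 60)   # 3600 g
recovered = 2700.0                        # dried sump material, g
print(f"fed {fed:.0f} g, efficiency {direct_efficiency(fed, recovered):.1f}%")

# Any material lost during drain-down and recovery can only lower the result.
lost_in_recovery = 50.0
print(f"with recovery losses: {direct_efficiency(fed, recovered - lost_in_recovery):.1f}%")
```

Because recovery losses can only reduce the recovered mass, the error runs in one direction, which is what makes the Direct estimate conservative.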

With this method, the efficiency of the device is calculated directly from the recovered sediment. A subsample can be taken from the sump material and a particle size gradation performed, which shows whether the captured material has shifted from the original feed gradation. Based on that information, the analysis shows the flow rates and particle sizes for which the device is effective at removing and retaining sediment.
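One way to turn the two gradations into a picture of which particle sizes the device handles well is to compute a removal efficiency for each size class: the captured mass in a class divided by the fed mass in that class. The Python sketch below assumes gradations are reported as mass fractions per size class; the class boundaries, fractions and masses are hypothetical.

```python
def per_class_efficiency(mass_fed_g, feed_fractions, mass_captured_g, captured_fractions):
    """Removal efficiency (%) for each particle size class.

    feed_fractions and captured_fractions map size class -> mass fraction,
    each summing to 1. Efficiency for a class = captured mass / fed mass.
    """
    for name, fractions in (("feed", feed_fractions), ("captured", captured_fractions)):
        if abs(sum(fractions.values()) - 1.0) > 1e-6:
            raise ValueError(f"{name} fractions must sum to 1")
    efficiencies = {}
    for size_class, f_feed in feed_fractions.items():
        fed = mass_fed_g * f_feed
        captured = mass_captured_g * captured_fractions.get(size_class, 0.0)
        efficiencies[size_class] = 100.0 * captured / fed if fed > 0 else float("nan")
    return efficiencies


# Hypothetical gradations (mass fractions by particle size class).
FEED = {"<75 um": 0.25, "75-150 um": 0.25, "150-250 um": 0.25, ">250 um": 0.25}
CAPTURED = {"<75 um": 0.12, "75-150 um": 0.24, "150-250 um": 0.31, ">250 um": 0.33}

for size_class, eff in per_class_efficiency(3600.0, FEED, 2700.0, CAPTURED).items():
    print(f"{size_class:>11}: {eff:5.1f}% removed")
```

The shift toward coarser material in the captured sample is what drives the low efficiency for the finest class; weighting the class efficiencies by the feed fractions recovers the overall 75 percent (2,700 g of 3,600 g).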

The drawback of direct testing is that it is not suitable for systems that trap sediment within a medium, such as filters and infiltration systems, because the captured material is difficult to recover and weigh. However, because separators rely primarily on particle settling, the Direct test method is probably the most accurate and conservative way of assessing them.

Conclusion

While field testing provides valuable information on the performance of treatment systems under real-world conditions, the problems encountered in setting up a test site and the many parameters that cannot be controlled in the field make it difficult to use as a means of comparing and contrasting the performance of stormwater treatment systems. Laboratory testing, however, provides a means by which the efficacy of treatment systems can be compared under the same conditions.

In selecting a protocol for laboratory testing, it is important to use test methods that best represent the efficacy of treatment systems. Assessment of the Direct and Indirect test protocols shows that the Direct test method provides a level of repeatability and consistency that is difficult to replicate with Indirect tests. The Direct test method also allows particle size distribution and retention within devices to be investigated, which is a key component of assessing device performance.

About the Author:

Kwabena Osei is the U.S. Research & Development Manager at Hydro International in Portland, Maine. He has authored nearly 20 technical papers on stormwater, wastewater and combined sewer overflow testing. He has a master's degree in Civil/Environmental Engineering from the University of Vermont.

What does field-testing actually test?

In theory, field studies provide an opportunity to evaluate a system's performance under field conditions. This provides information that helps regulators and consultants assess whether the BMP provides the required level of control. However, there are many uncontrollable variables associated with field testing that cast a cloud of uncertainty over the integrity of the performance results and render it nearly impossible to compare the performance of BMP 1 tested at Site A to BMP 2 tested at Site B. Uncontrollable field variables include:

• Land use: Beyond broad considerations, it is difficult to determine the expected pollutant loadings from a site prior to starting any monitoring and sampling work. Different land uses not only present different challenges with respect to the treatability of pollutants but also often possess different environmental factors that can compromise treatment performance. For example, a land use that typically produces a large amount of organic, low-specific-gravity debris can be much more challenging for a sedimentation device than a land use that typically produces inorganic, high-specific-gravity sediment. Considerable time can be spent "shopping" for a site with the "right" characteristics, such as a high loading rate of coarse sediment, in order to increase the odds of obtaining favorable percent removal results.

• Geography: The climatic conditions in a locale also have a significant bearing on removal efficiency. Whereas some areas see large volumes of rainfall annually, other areas see very little rainfall, leaving more time for piles of sediment to build up on the site prior to the next rain. Rainfall intensities also can vary significantly between locations, making it difficult to compare data from different locations when assessing different devices.

• Sampling equipment: Much has been written about the monitoring equipment currently used to sample stormwater flows. Flow meters are subject to errors that are hard to detect, let alone correct. Because samplers cannot capture the entire spectrum of solids, the data are skewed toward the finer fraction of sediment, which in turn depresses reported system performance. Sampling equipment also cannot adequately capture a representative cross section of flow within a pipe; more favorable "percent removal" results can often be obtained simply by moving the sampling tube to a "better" location within the pipe.

• Resources: A full program of field testing can take more than two years. Agencies often require that test data be collected for an entire year to account for seasonal variations in rainfall. Even then, some storms may have to be discarded from the data set if they do not meet qualifications such as rainfall depth and duration, and equipment breakdowns during storms can lead to the loss of more test data. The result is a significant commitment of human and financial resources. If the time required for site selection, site preparation, equipment set-up, pre-monitoring and decommissioning of the system is also factored in, it could be many more years before data are available for analysis. The financial cost of field testing stifles innovation: once a manufacturer has field tested its proprietary BMP and had it approved, it has absolutely no incentive to modify the product for increased performance when its reward for doing so is to repeat the same expensive field-testing program all over again.
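The effect of sampler bias described under "Sampling equipment" can be illustrated with a toy calculation. The Python sketch below assumes, hypothetically, that an automatic sampler captures fine particles fully but only part of the coarser ones, and that the same bias applies to influent and effluent samples. All concentrations, pass-through fractions and capture factors are invented for illustration.

```python
# Hypothetical size classes and values, for illustration only.
TRUE_INFLUENT_MG_L = {"fine": 60.0, "medium": 60.0, "coarse": 80.0}
DEVICE_PASS_FRACTION = {"fine": 0.50, "medium": 0.20, "coarse": 0.05}  # fraction escaping
SAMPLER_CAPTURE = {"fine": 1.00, "medium": 0.80, "coarse": 0.25}       # fraction sampled


def removal_pct(c_in: float, c_out: float) -> float:
    """Percent removal from total influent and effluent concentrations."""
    return 100.0 * (1.0 - c_out / c_in)


def true_and_apparent_removal(influent, pass_fraction, sampler_capture):
    """Return (true %, apparent %) removal when samples under-represent coarse solids."""
    effluent = {k: influent[k] * pass_fraction[k] for k in influent}
    true_pct = removal_pct(sum(influent.values()), sum(effluent.values()))
    sampled_in = sum(influent[k] * sampler_capture[k] for k in influent)
    sampled_out = sum(effluent[k] * sampler_capture[k] for k in effluent)
    return true_pct, removal_pct(sampled_in, sampled_out)


true_pct, apparent_pct = true_and_apparent_removal(
    TRUE_INFLUENT_MG_L, DEVICE_PASS_FRACTION, SAMPLER_CAPTURE
)
print(f"true removal {true_pct:.1f}%, reported from samples {apparent_pct:.1f}%")
```

Because the device removes coarse particles most effectively, under-sampling them shrinks the measured influent load more than the effluent load, and under these assumptions the reported removal drops from 77 percent to about 68 percent.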